Summary

Imagine leaving your house unlocked because you thought “only my friends visit.” That’s what Langflow did with its code validation endpoint.

Langflow (before version 1.3.0) had a feature that allowed people to submit Python code snippets so the server could “check” them. Unfortunately, the way this was built meant the server wasn’t just checking the code; it was actually running pieces of it. And since this endpoint didn’t even require authentication in many setups, anyone who could reach it over the internet could send a payload and trick Langflow into running arbitrary Python code.

That’s CVE-2025-3248, a critical unauthenticated remote code execution (RCE) vulnerability with a CVSS score of 9.8.

Technical Details

Langflow wanted to be helpful. Developers writing Python-powered “components” could send their code to the server, and the server would:

- Parse it with ast.parse(). This converts the submitted code into an Abstract Syntax Tree (AST). Nothing dangerous happens at this stage: parsing is safe because it only builds a tree of Python constructs.

- Compile it with Python’s compile(). The AST is then turned into Python bytecode. This is where risk starts creeping in, because the code is now executable even though it hasn’t been run yet.

- Run it with exec() to “validate” it. Finally, Langflow used exec() on the compiled code to “evaluate” function definitions and decorators, and even attempted to load modules using importlib.import_module().
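The three steps above can be sketched roughly as follows. This is a simplified, hypothetical reconstruction for illustration, not Langflow's actual source: the function name `validate_code_unsafe` and the shape of the returned dictionary are assumptions.

```python
import ast
import importlib


def validate_code_unsafe(source: str) -> dict:
    """Hypothetical exec-based validator (illustrative only, not Langflow's code)."""
    errors = {"imports": [], "function": []}

    # Step 1: parsing only builds a tree; nothing from `source` runs here.
    try:
        tree = ast.parse(source)
    except SyntaxError as exc:
        errors["function"].append(str(exc))
        return errors

    for node in tree.body:
        if isinstance(node, ast.Import):
            # Importing a module runs that module's top-level code.
            for alias in node.names:
                try:
                    importlib.import_module(alias.name)
                except ModuleNotFoundError as exc:
                    errors["imports"].append(str(exc))
        elif isinstance(node, ast.FunctionDef):
            # Step 2: compile just this function definition to bytecode.
            module = ast.Module(body=[node], type_ignores=[])
            code_obj = compile(module, "<string>", "exec")
            # Step 3: running the def evaluates its decorators and defaults.
            try:
                exec(code_obj)
            except Exception as exc:
                errors["function"].append(str(exc))
    return errors
```

For well-formed, harmless input the function returns empty error lists, which is why the pattern looks like a reasonable validator at first glance.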

On paper, this sounds like a way to prevent broken code from sneaking in.

The Fatal Mistake:

The dangerous part is how Python works under the hood. When you define a function:

- Any decorators (@something) are evaluated immediately. If you wrote @do_evil(), that function is called the moment the def statement runs.

- Any default parameter values are also evaluated immediately. So writing def foo(x=os.system("rm -rf /")): would actually execute the system command right at definition time.

By using exec() on untrusted code, Langflow wasn’t just “peeking” at the function’s structure — it was running these hidden expressions.
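A small, harmless experiment makes the definition-time behavior concrete. The `record` helper below is a hypothetical stand-in for an attacker-chosen expression; it only logs that it was evaluated.

```python
calls = []


def record(label):
    """Harmless stand-in for an attacker-chosen expression: it only logs that it ran."""
    calls.append(label)
    return lambda func: func  # lets record(...) also be used as a decorator


source = '''
@record("decorator")
def foo(x=record("default")):
    calls.append("body")
'''

# Executing the definition alone evaluates the decorator and default expressions.
exec(compile(source, "<submitted>", "exec"), {"record": record, "calls": calls})

# foo was defined but never called, so "body" is absent from the log.
print(calls)
```

Both `record(...)` expressions show up in `calls` even though `foo` is never invoked, which is exactly the window an attacker needs.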

So what was vulnerable?

The /api/v1/validate/code endpoint. It took whatever Python code was sent, parsed it, compiled it, and executed parts of it during validation. Since decorators and defaults run at definition time, attackers had a perfect opportunity to slip in malicious expressions.

Attackers could:

- Run arbitrary OS commands (steal files, drop web shells, open reverse shells).

- Harvest secrets from environment variables (API keys, tokens).

- Use Langflow’s access to pivot further inside networks.

Exploitation

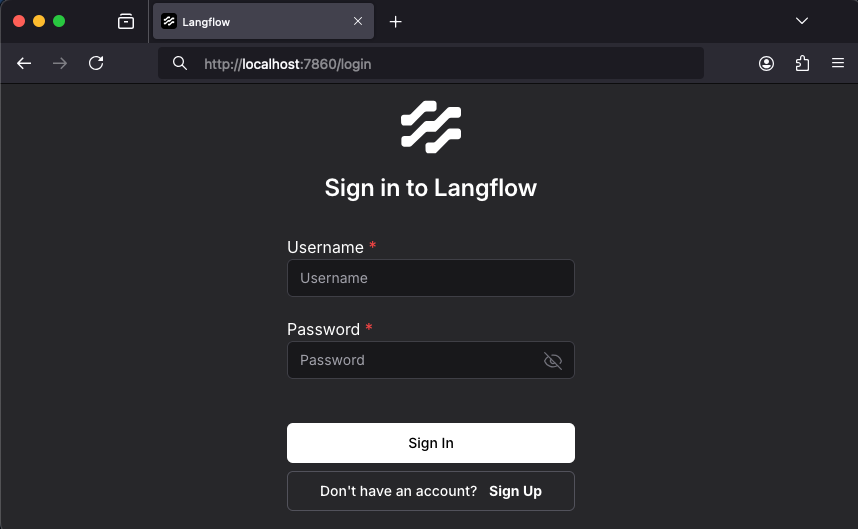

To demonstrate the vulnerability, we set up a local Langflow instance.

Step 1: Spin up Langflow in a local test environment (never directly in production) and access it via http://localhost:7860/. You can use Docker for ease.

Step 2: Using Burp Suite, capture any request from the Langflow interface and send it to the Repeater tab. From here, we can manually craft a malicious request to the vulnerable endpoint.

Step 3: Craft the malicious request

We target the /api/v1/validate/code endpoint, which accepts Python code in JSON format. By injecting an expression inside a decorator (@exec(...)), we can smuggle in OS commands.

Example Malicious Request:

Here’s what happens:

- The decorator @exec(...) runs during function definition.

- It executes Python’s subprocess.check_output(['id']), which runs the Linux id command.

- The output is raised as an exception, allowing the response to contain the command result.
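The exception trick can be reproduced locally without touching a real server. The sketch below uses a hypothetical stand-in validator (`validate_function_unsafe`, with an assumed response shape) and swaps the `subprocess.check_output(['id'])` call for a harmless arithmetic expression.

```python
def validate_function_unsafe(source: str) -> dict:
    """Hypothetical exec-based validator that reports errors back to the caller."""
    errors = []
    try:
        exec(compile(source, "<submitted>", "exec"), {})
    except Exception as exc:
        # The exception message is echoed back, attacker-chosen text included.
        errors.append(str(exc))
    return {"function": {"errors": errors}}


# Same shape as the payload described above, with a harmless expression
# in place of the subprocess call.
payload = '''
@exec("raise Exception('leaked: ' + str(6 * 7))")
def foo():
    pass
'''

response = validate_function_unsafe(payload)
print(response)  # {'function': {'errors': ['leaked: 42']}}
```

Because the validator faithfully returns error messages, anything the injected expression can compute ends up in the response body.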

Step 4: Send the malicious request and observe the server response, which will display the output of the executed command. That confirms the injected code was executed on the host system.

From here, attackers could easily replace ['id'] with more destructive commands, or even a one-liner reverse shell, gaining full interactive access to the host. This is why the bug is so dangerous when exposed to the internet.

Mitigation

- Upgrade immediately to Langflow 1.3.0 or later. This version requires authentication on the code validation endpoint.

- Don’t expose Langflow to the internet. Put it behind a VPN, an authenticating reverse proxy, or zero-trust access.

- Run Langflow under a non-root, restricted account.

- Never exec() user code. Parsing is fine. Executing is a trap.

- If execution is truly needed, sandbox it. Use containers, seccomp, or separate microservices with no secrets.
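As a minimal sketch of the "parsing is fine" advice, a validator can stop at `ast.parse()` and inspect the tree without ever calling `compile()` or `exec()`. The function name `validate_code_static` and its return format are assumptions made for this example, not part of Langflow.

```python
import ast


def validate_code_static(source: str) -> dict:
    """Check syntax and list top-level functions without executing anything."""
    try:
        tree = ast.parse(source)
    except SyntaxError as exc:
        return {"ok": False, "error": f"line {exc.lineno}: {exc.msg}", "functions": []}

    functions = [
        node.name
        for node in tree.body
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef))
    ]
    return {"ok": True, "error": None, "functions": functions}


# The decorator and default expressions are parsed into nodes but never evaluated.
submitted = '''
@exec("raise Exception('this never runs')")
def foo(x=print("neither does this")):
    pass
'''
print(validate_code_static(submitted))  # {'ok': True, 'error': None, 'functions': ['foo']}
```

This catches syntax errors and reports structure, but it cannot detect runtime problems such as missing modules. Python's documentation also notes that very large or deeply nested input can crash the parser, so input size limits are still worth enforcing.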

References

- Horizon3: Unsafe at Any Speed — Abusing Python exec for Unauth RCE in Langflow AI

- Zscaler ThreatLabz: CVE-2025-3248: RCE Vulnerability in Langflow
